What to Expect from Nvidia's GTC 2024 Keynote on March 18: Insights and Innovations Revealed

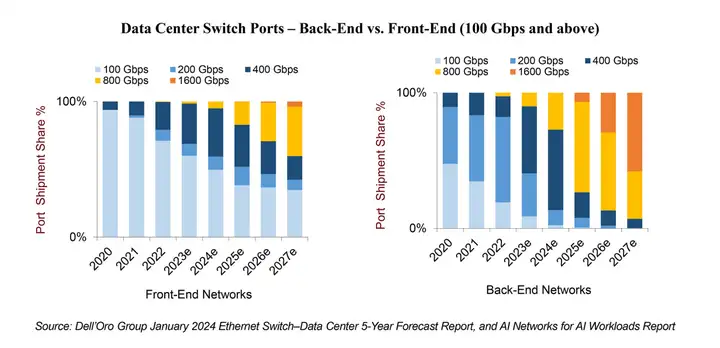

During GTC 2024, NVIDIA announced its latest Blackwell B200 Tensor Core GPU, designed to support large AI language models with trillions of parameters. The Blackwell B200 requires advanced 800Gbps networking, which is fully in line with the forecasts outlined in the AI Network Report for AI Workloads. With AI workload traffic expected to grow tenfold every two years, the networks carrying these workloads are expected to stay ahead of traditional front-end networks by at least two speed upgrade cycles.
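To put the growth figure in perspective, a tenfold increase every two years can be converted into an equivalent yearly rate. The Python sketch below does that arithmetic. The function name `growth_multiple` and the assumption of smooth compound growth are illustrative choices, not something taken from the report.

```python
def growth_multiple(years: float, factor: float = 10.0, period_years: float = 2.0) -> float:
    """Traffic multiple after `years`, assuming smooth compound growth
    of `factor` every `period_years` (an illustrative assumption)."""
    return factor ** (years / period_years)


annual = growth_multiple(1)     # square root of 10, roughly 3.16x per year
four_year = growth_multiple(4)  # two doubling-of-decade periods, 100x
print(f"{annual:.2f}x per year, {four_year:.0f}x after four years")
```

Under that assumption, traffic roughly triples every year, which is why a network sized for today's cluster can fall behind within a single refresh cycle.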

While many topics and innovative solutions were discussed at OFC for cross-datacenter applications and for compute interconnects that scale the number of accelerators within the same domain, this post focuses primarily on applications within the datacenter. Specifically, it focuses on scaling the network required to connect the various accelerated nodes in large AI clusters with thousands of accelerators. This network is often referred to in the industry as the “AI backend network” (also referred to, and offered by some vendors, as the network for east-west traffic). Here are some of the topics and solutions explored at the show:

1) Linear drive pluggable optics vs. linear receive optics vs. co-packaged optics

Pluggable optics are expected to account for an increasing portion of system-level power consumption, a problem that will be further amplified as cloud service providers build out next-generation AI networks featuring a proliferation of high-speed optics.

At OFC 2023, the introduction of Linear Drive Pluggable Optics (LPOs) promised significant cost and power savings by removing the DSP, sparking a flurry of testing activity. Fast forward to OFC 2024, where we witnessed nearly 20 LPO demonstrations. Conversations during the event revealed industry-wide enthusiasm for the high-quality 100G SerDes integrated into the latest 51.2Tbps network switch silicon, with many eager to take advantage of this advancement, which makes it possible to remove the DSP from pluggable optical modules.

However, despite the excitement, the hesitancy of hyperscalers suggests that LPOs may not be ready for mass adoption. Interviews highlighted that hyperscalers are reluctant to take on the responsibility for qualification and potential failure of LPOs. Instead, they prefer to let switching vendors take on these responsibilities.

In the meantime, early deployments of 51.2Tbps network chips are expected to continue to rely on conventional DSP-based pluggable optics until at least the middle of next year. However, if LPOs can be shown to deploy reliably at scale while providing hyperscalers with significant power savings, enabling them to deploy more accelerators per rack, then the lure of adopting LPOs may be irresistible. Ultimately, the decision will depend on whether LPOs can deliver on those promises.

Additionally, half-retimed linear optics (HALO), also known as linear receive optics (LROs), were discussed at the show. LROs keep the DSP chip only on the transmit side (rather than removing it completely, as LPOs do). While LPOs may be feasible with 100G-PAM4 SerDes, they may become challenging with 200G-PAM4 SerDes, where LROs may be required.

Meanwhile, Co-Packaged Optics (CPOs) are still in the development phase, with large industry players such as Broadcom demonstrating continued development and advancement of the technology. While we believe that current LPO and LRO solutions will certainly have a faster time to market than CPOs, the latter may eventually become the only solution capable of achieving higher speeds at some point in the future.

Before closing this section, it is worth remembering that, where possible, copper remains a better choice than any of the optical connectivity options discussed above. In short, use copper when possible and optics when necessary. Interestingly, liquid cooling can facilitate the densification of accelerators within a rack, thereby increasing the use of copper to connect the accelerator nodes within that rack. The NVIDIA GB200 NVL72, recently announced at GTC, perfectly illustrates this trend.

2) Optical circuit switch

OFC 2024 brought some interesting announcements related to optical circuit switches (OCS). OCS can bring many benefits, including high bandwidth and low network latency, as well as significant capital expenditure savings. The savings arise because OCS switches can significantly reduce the number of electrical switches required in the network, thereby eliminating the expensive optical-electrical-optical conversion associated with those switches. In addition, unlike electrical switches, OCS switches are speed-independent and do not require upgrades when servers adopt next-generation optical transceivers.

However, OCS is a new technology, and so far only Google, after years of development, has been able to deploy it on a large scale in its data center network. In addition, OCS switches may require changes to the installed fiber base. We are therefore still watching to see whether other cloud service providers plan to follow Google and adopt OCS switches in their networks.

3) Path to 3.2 Tbps

At OFC 2023, a number of 1.6 Tbps optics and transceivers based on 200G/lambda were introduced. At OFC 2024, we witnessed further technical demonstrations of such 1.6 Tbps optics. While we do not expect volume shipments of 1.6 Tbps optics until 2025/2026, the industry has already begun working hard to explore various paths and options to achieve 3.2 Tbps.

Given the complexities encountered in transitioning electrical lane speeds from 100G-PAM4 to 200G-PAM4, initial 3.2 Tbps solutions may use 16 lanes of 200G-PAM4 in the OSFP-XD form factor instead of 8 lanes of 400G-PAMx. It is worth noting that OSFP-XD was originally explored and demonstrated at OFC 2022, and it may be brought back into use due to the urgency of AI cluster deployments. A 3.2 Tbps solution in the OSFP-XD form factor offers higher panel density and cost savings compared to 1.6 Tbps. Ultimately, the industry is expected to find a way to achieve 3.2 Tbps based on 8 lanes of 400G-PAMx SerDes, although it may take some time to achieve this goal.
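The lane (channel) arithmetic behind these options is simple enough to check directly. The Python sketch below multiplies lane count by nominal per-lane rate for the configurations mentioned above. The helper name `module_gbps` is hypothetical, and the figures are nominal rates that ignore encoding and FEC overhead.

```python
def module_gbps(lanes: int, gbps_per_lane: int) -> int:
    """Nominal aggregate rate of a module: lane count times per-lane rate."""
    return lanes * gbps_per_lane


# Configurations discussed in the text, using nominal per-lane rates.
options = {
    "1.6T, 8 x 200G-PAM4": module_gbps(8, 200),
    "3.2T in OSFP-XD, 16 x 200G-PAM4": module_gbps(16, 200),
    "3.2T, 8 x 400G-PAMx": module_gbps(8, 400),
}

for name, gbps in options.items():
    print(f"{name}: {gbps / 1000:.1f} Tbps")
```

Both 3.2 Tbps options reach the same aggregate rate. The 16-lane route reuses the 200G-PAM4 lanes already developed for 1.6 Tbps, at the cost of doubling the lane count per module.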

In summary, OFC 2024 showcased many potential solutions aimed at addressing common challenges: cost, power, and speed. We expect different hyperscalers to make different choices, leading to market diversification. However, one of the key considerations is time to market. It is worth noting that the refresh cycle for AI backend networks is typically around 18 to 24 months, which is much shorter than the 5 to 6 years for traditional front-end networks used to connect general-purpose servers.
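As a rough illustration of what the shorter refresh cycle implies, the Python sketch below counts how many refreshes fit into a fixed planning window. The six-year window and the helper name `refreshes` are assumptions chosen for illustration. Only the cycle lengths come from the text above.

```python
def refreshes(horizon_months: int, cycle_months: int) -> float:
    """Number of refresh cycles that fit into a planning horizon."""
    return horizon_months / cycle_months


horizon = 72  # six years, an assumed planning window

# AI backend networks: 18 to 24 month refresh cycle.
print("AI backend:", refreshes(horizon, 24), "to", refreshes(horizon, 18))
# Traditional front-end networks: 5 to 6 year refresh cycle.
print("Front-end: ", refreshes(horizon, 72), "to", refreshes(horizon, 60))
```

Over the same window, an AI backend network would be refreshed three to four times while a front-end network is refreshed about once, which is why time to market weighs so heavily on the choice among the options above.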